2005 JLab News Release

Supercomputing on a Shoestring: Cluster Computers at JLab

November 7, 2005

Cluster computers are tackling computations that were once reserved for the most powerful supercomputers. The people behind these technological wonders are the members of Jefferson Lab's High Performance Computing Group, led by Chip Watson.

So what is high performance computing? "It's using large computing resources to calculate something. In our case, we're calculating the theory that corresponds to the experiments that run at Jefferson Lab," Watson says. That theory is quantum chromodynamics, or QCD, and it describes the strong force that dictates how quarks and gluons build protons, neutrons and other particles. He says the theory can't be solved directly with pencil and paper. "The equations are too complicated. You have to use numerical techniques on a computer to solve these equations," Watson explains.

Photo: The High Performance Computing Group in front of the newest cluster computer. (l-r) Jie Chen, Sherman White, Chip Watson, Ying Chen, and Balint Joo.

The problem that is to be solved is fed into the first processor of the cluster, which then shares the problem with the other processors. "The problem is divided up into smaller parts, so each computer works on one part of the problem. And then we use a high-speed network to connect all the computers together so they can communicate all their partial solutions to each other," Watson explains. The first processor then collects all the partial solutions and forwards the calculation out of the cluster, where it can be saved.

Photo: Employees work to unpack components of and install the 2004 cluster computer. The completed cluster, called 4G, consists of 384 2.8 GHz Intel Pentium 4 Xeon computers connected with ethernet cables.

Each new cluster is more powerful than the last, since the performance of commercially available computers is increasing, even as the price drops.
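The divide, compute, and collect pattern Watson describes can be illustrated with a small sketch. This is not JLab's software: it is a single-machine stand-in written in Python, with threads playing the role of cluster nodes and plain list lookups playing the role of the high-speed network. The function names (`smooth`, `split`, `cluster_step`) and the toy "average with your neighbors" update are assumptions chosen for illustration; a real cluster would typically use a message-passing library across separate machines.

```python
from concurrent.futures import ThreadPoolExecutor


def smooth(values, left, right):
    """Replace each value with the average of itself and its two neighbors.

    `left` and `right` are the edge values supplied from outside this block.
    """
    padded = [left] + list(values) + [right]
    return [(padded[i - 1] + padded[i] + padded[i + 1]) / 3.0
            for i in range(1, len(padded) - 1)]


def split(values, parts):
    """Divide the problem into `parts` contiguous blocks of near-equal size."""
    size, extra = divmod(len(values), parts)
    blocks, start = [], 0
    for p in range(parts):
        end = start + size + (1 if p < extra else 0)
        blocks.append(values[start:end])
        start = end
    return blocks


def cluster_step(values, workers=4):
    """One update step: hand out blocks, share edges, compute, collect."""
    if not values:
        return []
    workers = min(workers, len(values))  # never create an empty block
    blocks = split(values, workers)

    # "Communication": each block learns the edge value of each neighbor.
    # The two outermost blocks reuse their own edge value as a boundary.
    lefts = [blocks[0][0]] + [b[-1] for b in blocks[:-1]]
    rights = [b[0] for b in blocks[1:]] + [blocks[-1][-1]]

    # Each worker updates only its own block.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial = list(pool.map(smooth, blocks, lefts, rights))

    # The "first processor" collects the partial solutions in order.
    return [x for block in partial for x in block]
```

Calling `cluster_step(data, workers=3)` should give the same list as `smooth(data, data[0], data[-1])` computed in one piece, which is the point of the exercise: splitting the work changes who does it, not the answer.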
By using off-the-shelf components, the group is able to build a supercomputer-class machine without paying a supercomputer-class price. "What we try to optimize is science per dollar, not peak performance," Watson says. For instance, the latest cluster, installed in 2004, is fast enough for a place on the Top500 Supercomputer Sites List. "Today we're using computers of order a teraflop, which means they can produce, they do a million million operations per second that are useful to the application," Watson says. However, even though the system's average performance is good enough for the list, you won't see it there. Watson says it isn't optimized for the benchmark computations used to determine a supercomputer's peak performance for the list.

Photo: The 2003 machine is a 256-processor cluster computer built from 2.67 GHz Intel Xeon processors.

Lattice QCD (LQCD) is the numerical approach to solving QCD, the theory that describes the strong force that dictates how quarks and gluons build protons, neutrons and other particles. LQCD is being used to calculate how subatomic particles behave. It's a miniature, mathematical representation of the minuscule space between subatomic particles. A typical problem might be a calculation of the properties of the nucleon (a proton or a neutron) and its excited states, which are produced when a nucleon has extra energy. Another typical calculation, currently underway, is a determination of the electromagnetic form factors of the nucleon, which describe the distribution of electric charge and current inside the nucleon.

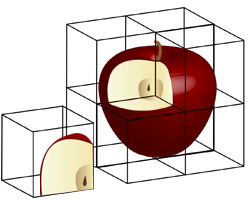

To calculate the solution to a science problem, a cluster computer slices space up into cubes. Each processor in the cluster is responsible for its own little cube of space as time passes, while also calculating the effects that neighboring cubes have on the material inside its own cube. For instance, in this simple conceptual drawing, one processor would be responsible for the chunk of apple on the left, while calculating the effects the rest of the apple has on its chunk.

Watson says a typical problem put to the cluster computers at JLab takes about a year to calculate. "But, of course, we don't run anything for a year, because if something went wrong nine months into it, then we would have lost everything. Instead, solving this large problem is broken up into pieces of about eight hours. The application then saves its state to disk and then restarts again. So if the computer fails at any point, we would just have to go back to the last checkpoint, or the last time the computer saved to disk," Watson explains.

Computer time is allocated based on the recommendations of the United States Lattice Gauge Theory Computational Program (USQCD), a consortium of top LQCD theorists in the United States that spans both high-energy physics and nuclear physics.

Photo: A rat's nest of wires

Watson says that although Jefferson Lab's resources have been deployed in the form of cluster computers, SciDAC (the Department of Energy's Scientific Discovery through Advanced Computing program) is also supporting development of supercomputers. The machines crunch and churn out answers to some of the most difficult calculations. In fact, some of these calculations are needed to form the basis for problems that are later put to cluster computers. Fermilab is slated for a new supercomputer next year, and Jefferson Lab may be next on the list for a 1000+ node cluster computer in 2007. In the meantime, some components for the Jefferson Lab 2005 cluster have already been ordered and will be installed this winter in the spacious new computer room in the CEBAF Center Addition.
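The checkpoint-and-restart scheme Watson describes, in which a long job saves its state to disk after each piece and resumes from the last save after a failure, can be sketched in a few lines. This is a toy illustration in Python rather than the software actually run at JLab: the function name `run_with_checkpoints`, the JSON state file, the running sum that stands in for real physics, and the `crash_at` switch used to simulate a failure are all assumptions made for the example.

```python
import json
import os


def run_with_checkpoints(total_steps, steps_per_segment, path, crash_at=None):
    """Advance a toy calculation, saving state to disk after every segment.

    If a checkpoint file already exists at `path`, work resumes from it.
    `crash_at` (hypothetical, for demonstration) raises an error at that step.
    """
    if os.path.exists(path):
        with open(path) as f:
            state = json.load(f)  # restart from the last checkpoint
    else:
        state = {"step": 0, "value": 0}  # fresh start

    while state["step"] < total_steps:
        stop = min(state["step"] + steps_per_segment, total_steps)
        for step in range(state["step"], stop):
            if step == crash_at:
                raise RuntimeError(f"simulated failure at step {step}")
            state["value"] += step  # stand-in for the real computation
        state["step"] = stop

        # Write to a temporary file, then rename it over the old checkpoint,
        # so a failure during the write cannot corrupt the last good save.
        tmp = path + ".tmp"
        with open(tmp, "w") as f:
            json.dump(state, f)
        os.replace(tmp, path)

    return state
```

If a run fails partway through a segment, calling the function again with the same `path` repeats only the work done since the last save. At the scale quoted above, a year of running in eight-hour pieces is roughly 365 × 24 / 8 = 1095 segments, so a failure costs at most about eight hours of work rather than months.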
Thomas Jefferson National Accelerator Facility's (Jefferson Lab's) basic mission is to provide forefront scientific facilities, opportunities and leadership essential for discovering the fundamental structure of nuclear matter; to partner with industry to apply its advanced technology; and to serve the nation and its communities through education and public outreach. Jefferson Lab, located at 12000 Jefferson Avenue, is a Department of Energy Office of Science research facility managed by the Southeastern Universities Research Association.

Content by Public Affairs. Updated January 12, 2006.