2006 News Release

Lab Enhances Scientific Data Sharing with Cutting-Edge Connection

Andy Kowalski adjusts a cable on the network interface.

In early 2005, researchers affiliated with Hall B wanted to transfer the raw data from a recent experiment, the g11 run, from the tape silo to computers offsite. Had they been able to simply send the data out through Jefferson Lab's network connection — with no one else sending e-mail, downloading papers or reading physics news on the web — the transfer would have taken about 165 hours (almost seven days) at the Lab's previous peak data transfer rate of 155 Megabits per second (Mbps). Jefferson Lab's newly upgraded network connection is able to transfer data at a rate of up to 10 Gigabits per second (Gbps), so that same transfer can now be completed in just 2.5 hours.

Details of the New Network

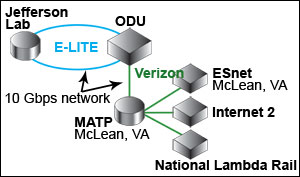

The upgrade was made possible by an intricate system of agreements and partnerships with local, regional and national research institutions and high-speed network providers. Jefferson Lab is now directly connected to the Eastern Litewave Internetworking Technology Enterprise (E-LITE), a new fiber-optic ring installed by Verizon in Hampton Roads. The E-LITE partnership is led by Old Dominion University (ODU) and includes several other Hampton Roads research centers: The College of William & Mary, ODU's Virginia Modeling, Analysis and Simulation Center, NASA's Langley Research Center, and the U.S. Joint Forces Command.

Of particular importance is the direct connection to ODU, which hosts a node that connects E-LITE partner institutions to a statewide fiber-optic network called VORTEX, the Virginia Optical Research Technology Exchange (see illustration). Through VORTEX, Jefferson Lab has a direct connection to the Mid-Atlantic Terascale Partnership (MATP) in McLean, Va.

MATP is a consortium of research institutions in Virginia, Maryland and Washington, D.C. The Southeastern Universities Research Association (SURA) sponsors Jefferson Lab's membership in the MATP consortium, which gives Jefferson Lab access to the Energy Sciences Network (ESnet). ESnet, Jefferson Lab's longtime Internet service provider, is a high-speed network serving thousands of Department of Energy scientists and collaborators worldwide. MATP also provides access to Internet2 and the National LambdaRail, a dedicated, advanced network connecting universities, national research centers and laboratories to foster the most advanced network applications.

Up and Running

The upgraded network was fully established on September 14, after two weeks of verification, extensive testing and minor tweaks. "Jefferson Lab experimenters need to be able to share the raw data from the experiments, reconstructed data, analysis data, and simulation data. This is in addition to the normal business information that you'd want to share over the Internet," says Andy Kowalski, Deputy Computer Center Director and coordinator of the network upgrade. "Physicists will be able to transfer data from Jefferson Lab to the computing cluster at their home university, process the data there and then send the results back to Jefferson Lab. And that can now happen in an environment where the network is not the bottleneck," Kowalski says.

Curtis Meyer, a user and professor of physics at Carnegie Mellon University, says the new network's capabilities don't come a moment too soon. "Even now, the data sets from CLAS [Hall B's CEBAF Large Acceptance Spectrometer] are becoming quite large, with runs producing tens of Terabytes of raw data now the norm. In order to continue to get good physics out of CLAS, we need to learn how to handle such large data sets, and the option needs to be available to go through them several times," he remarks.

One Terabyte of data is the equivalent of a nine-mile-high stack of standard sheets of paper printed on both sides. That reaches higher than the tallest mountain on Earth.

The upgrade allows researchers to transfer large data files up to 60 times faster than before. For instance, transferring one Terabyte of data now takes just 15 minutes, compared to 15 hours at the Lab's previous peak rate of 155 Mbps.
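The per-Terabyte figures and the "up to 60 times" speedup follow from the ratio of the two link rates. A minimal sketch, assuming decimal units (1 TB = 10^12 bytes) and ideal links with no TCP, disk or traffic overhead:

```python
# Per-terabyte transfer times and the speedup of the upgraded link.
# Assumptions (not from the release): decimal units and ideal links
# running at their peak rates with no protocol or storage overhead.

TB_BITS = 8 * 10**12   # one terabyte expressed in bits
OLD_RATE = 155e6       # previous peak: 155 Mbps, in bits per second
NEW_RATE = 10e9        # upgraded link: 10 Gbps, in bits per second

old_hours = TB_BITS / OLD_RATE / 3600    # seconds -> hours
new_minutes = TB_BITS / NEW_RATE / 60    # seconds -> minutes
speedup = NEW_RATE / OLD_RATE            # ratio of peak rates

print(f"1 TB at 155 Mbps: {old_hours:.1f} h")
print(f"1 TB at 10 Gbps:  {new_minutes:.1f} min")
print(f"speedup: {speedup:.1f}x")
```

This prints about 14.3 hours, 13.3 minutes and a 64.5x ratio, which the release rounds to 15 hours, 15 minutes and "up to 60 times." Real transfers rarely sustain the full peak rate, so these are best-case numbers.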

"This is a great achievement for Jefferson Lab. It provides researchers with a new capability in terms of more easily sharing data and data analysis," says Roy Whitney, Jefferson Lab's Chief Information Officer. "We are now able to transmit large amounts of data at an incredibly accelerated rate, which further enhances our world-class research capabilities."

Responsible Stewardship

The upgrade also puts Jefferson Lab firmly on the leading edge with its ability to provide high-speed data transfers to computers offsite. Even so, careful preparation of the data before it is sent offsite is still in the best interest of researchers and the Lab. Meyer, who led the g11 data transfer effort, says that even had the Lab been able to send data at its current pace when that transfer took place, the computers at Carnegie Mellon University would not have been able to accept the transfer at those rates or store the large amount of data effectively. To make the transfer possible, his team spent time pruning the data down to a manageable size.

Gbps – One Gigabit per second is a unit of data transfer rate equal to 1,000,000,000 bits per second.

"We spent about a month looking very carefully at all of the information that was stored in the data files. We discovered that not only was much of the data simply unused space, but there were multiple (up to six) copies of the same information. We developed a format that reduced the data to only one copy of any piece of information, threw out the unused space, and then packed information more tightly. Finally, we applied standard compression algorithms. On top of all this, we built a tool set that allows one to transparently access both the original data and our new compressed data in exactly the same way. In the end, we were able to reduce the 11 Terabytes of data to 600 GB."
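The reduction steps Meyer describes (keep one copy, drop unused space, pack tightly, compress, then read both formats the same way) can be illustrated with a toy example. This is a hypothetical sketch, not the CLAS data format or the Carnegie Mellon tool set: the 64-byte record layout, the `(event_id, energy)` fields, the function names and the choice of Python's `zlib` as the "standard compression algorithm" are all assumptions made for illustration.

```python
import struct
import zlib

# HYPOTHETICAL raw layout, invented for illustration (NOT the CLAS format):
# each event occupies a fixed 64-byte slot holding the same
# (event_id, energy) pair three times, followed by zero padding.
RAW_SLOT = 64
PAIR = struct.Struct("<If")  # uint32 event_id + float32 energy = 8 bytes


def make_raw_record(event_id: int, energy: float) -> bytes:
    """Build one redundant, padded raw record."""
    body = PAIR.pack(event_id, energy) * 3           # duplicate copies
    return body + b"\x00" * (RAW_SLOT - len(body))   # unused space


def reduce_records(raw: bytes) -> bytes:
    """Keep one copy per event, drop padding, pack tightly, then compress."""
    packed = bytearray()
    for offset in range(0, len(raw), RAW_SLOT):
        packed += raw[offset:offset + PAIR.size]     # first copy only
    return zlib.compress(bytes(packed), 9)


def read_events(blob: bytes, reduced: bool) -> list[tuple[int, float]]:
    """One accessor for both formats, so callers see identical events."""
    if reduced:
        data, stride = zlib.decompress(blob), PAIR.size
    else:
        data, stride = blob, RAW_SLOT
    return [PAIR.unpack_from(data, i) for i in range(0, len(data), stride)]


# Usage: build 1,000 toy events and compare the two representations.
raw = b"".join(make_raw_record(i, 0.5 * i) for i in range(1000))
reduced = reduce_records(raw)
print(len(raw), "bytes raw ->", len(reduced), "bytes reduced")
print(read_events(raw, reduced=False) == read_events(reduced, reduced=True))
```

The single `read_events` entry point mirrors the idea of transparent access: analysis code asks for events and does not need to know which representation it is reading. The size reduction in this toy depends entirely on the invented layout and says nothing about the actual 11-Terabyte-to-600-GB result.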

The copy and compression took about a month, and then the transfer took place. "Now the entire data set sits live on a disk at Carnegie Mellon University, and about nine graduate students plus others are able to use it. We have probably skimmed through it at least five times with more planned," Meyer says. "So, what are we doing with this? We are carrying out partial wave analysis of photoproduction data. The ultimate goal is to look for excited baryons."

The tools instituted by the JLab Scientific Computing group and tested by Meyer's team have been made available to other researchers, as has the partial wave analysis software developed by Meyer's team.

Building a Brighter Future

"This upgrade also supports the future bandwidth requirements of the experimental program, the lattice QCD computing initiative, the planned 12 GeV Upgrade and a number of other projects here at Jefferson Lab," Kowalski says. The 12 GeV Upgrade project will double the energy of the electron beam and upgrade the scientific capability of four experimental halls to allow JLab scientists to delve even deeper into the mysteries of matter.

Meyer agrees. "Ultimately, we will need such tools and expertise to handle the data coming out of the Hall D GlueX experiment. As such, long-term development in this area is important," he says.

Related Stories and Links

- September 21 News Release

- Jefferson Lab Computing Center

- Carnegie Mellon University partial wave analysis information

Thomas Jefferson National Accelerator Facility’s (Jefferson Lab’s) basic mission is to provide forefront scientific facilities, opportunities and leadership essential for discovering the fundamental structure of nuclear matter; to partner with industry to apply its advanced technology; and to serve the nation and its communities through education and public outreach. Jefferson Lab, located at 12000 Jefferson Avenue, is a Department of Energy Office of Science research facility managed by Jefferson Science Associates, LLC.